
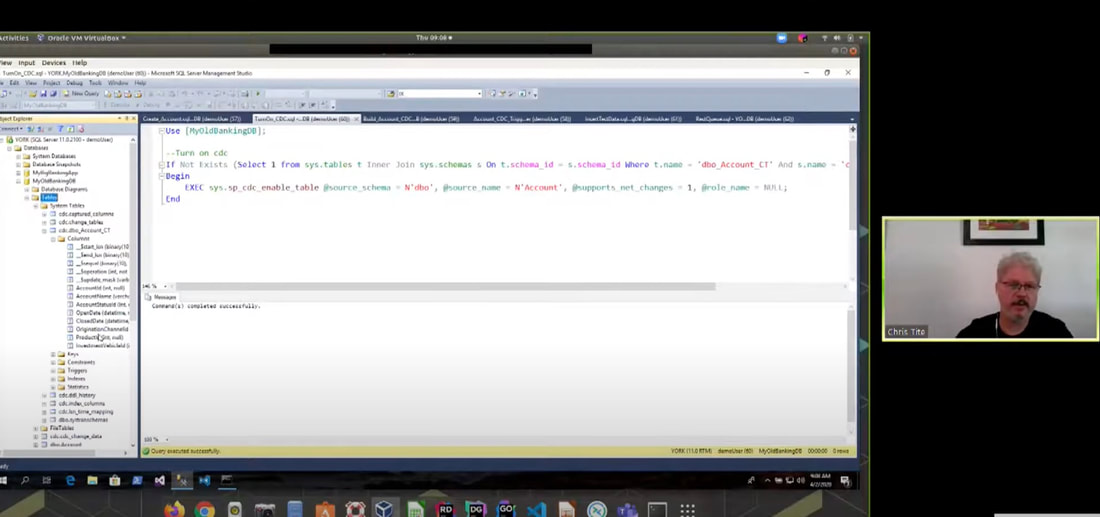

Event streaming is a powerful software architecture pattern which, when applied to enterprise systems, can create flexible, decoupled systems. Often when working with legacy systems we are not afforded the opportunity to leverage flexible design patterns and the latest technology to extend our architectures. Chris shares how we leveraged obscure technology in SQL to provide a stream of data events which were pushed into event streaming technologies like Kafka and Event Store, helping us create a decoupled enterprise system.

Chris walks you through how we implemented this approach on a legacy system, setting out the principles behind the approach and walking you through the code. Starting with a legacy SQL database, he introduces you to the rarely discussed features that let the developer hook into SQL replication events for instant access to data events. Using these SQL events, we'll turn the data into a JSON stream, making it available for Kafka or Event Store to consume. Next, you will fire up a Kafka Docker container and configure Kafka topics for event streaming, exposing event streams which newer parts of the system can consume.

Since Kafka is a powerful but transient event streaming technology, we also consider a more persistent event streaming technology, Event Store. Chris starts another Docker container, configures a series of event streams, and shows the power of Event Store projections to create a decoupled architecture. Finally, Chris contrasts the two event streaming technologies to find the best fit for architecting change in legacy systems. Event streaming in a legacy system shouldn't be difficult, and these are ideas on how to do it.
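As a rough illustration of the "SQL events to JSON stream" step, here is a minimal Python sketch. It is not the code from the talk: the shape of a captured change (`table`, `operation`, `row`), the envelope fields (`type`, `capturedAt`, `data`) and the function names are all assumptions made for this example, and how the changes are read from the database is left out entirely.

```python
import json
from datetime import datetime, timezone


def change_to_event(table, operation, row, captured_at=None):
    """Wrap one captured row change in a JSON event envelope.

    The envelope layout is hypothetical; use whatever your consumers expect.
    """
    captured_at = captured_at or datetime.now(timezone.utc)
    event = {
        "type": f"{table}.{operation}",          # e.g. "Customer.update"
        "capturedAt": captured_at.isoformat(),   # when the change was seen
        "data": row,                             # column name -> value
    }
    # default=str keeps non-JSON types (dates, decimals) from raising.
    return json.dumps(event, sort_keys=True, default=str)


def to_json_stream(changes):
    """Lazily turn an iterable of change dicts into JSON lines."""
    for change in changes:
        yield change_to_event(**change)


if __name__ == "__main__":
    sample = [
        {"table": "Customer", "operation": "insert", "row": {"Id": 7, "Name": "Ada"}},
        {"table": "Customer", "operation": "update", "row": {"Id": 7, "Name": "Ada L."}},
    ]
    for line in to_json_stream(sample):
        print(line)
```

Each line this produces is a self-contained JSON document, so it could be handed unchanged to a Kafka producer as a message value or appended to an Event Store stream as event data.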
